Dobble is a project that tries to find out whether you were ever painted: given a photo of your face, it looks for the most similar face in a collection of paintings. It uses face recognition, embeddings, and similarity search, and it is built on the Face Recognition library and the ChromaDB vector database.

This post walks through the main steps of the project. For more details and the full code, see the Code Repository section. You can also try a live demo of the project at Dobble.

Playing with faces

The project’s main idea is to find out whether you appear in a painting from the Prado Museum. The Prado Museum holds a collection of more than 8,000 paintings and is one of the most important art galleries in the world. The first step is to download the images from the museum and extract the faces from the paintings, which means scraping the museum’s website. I already did that for you and made the images available on Kaggle.

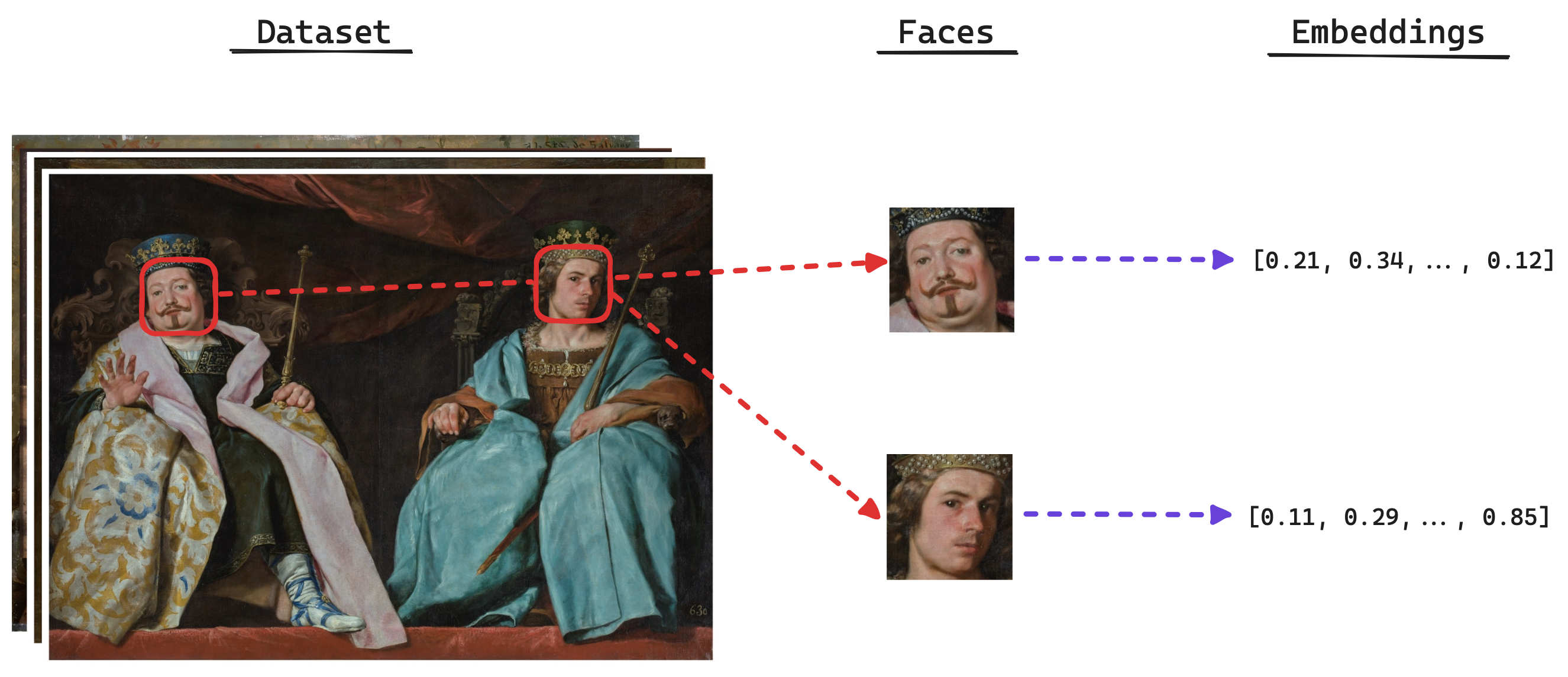

Once we have the data, it is time to extract the faces from the images. Going one step further, we will also need the embedding of each face. An embedding is a vector representation of a face that we can use to measure how similar two faces are: similar faces have similar embeddings.
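To make "similar faces have similar embeddings" a bit more concrete, here is a minimal sketch that compares vectors by Euclidean distance. The helper name `embedding_distance` and the toy 4-dimensional vectors are made up for illustration; real face embeddings are much longer, and the distance metric is an assumption of this sketch.

```python
import math


def embedding_distance(a, b):
    """Euclidean distance between two embeddings of the same length."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return math.dist(a, b)


# Toy vectors, invented for this example (not real face embeddings)
face_a = [0.10, 0.20, 0.30, 0.40]
face_b = [0.12, 0.18, 0.31, 0.41]   # small changes with respect to face_a
face_c = [0.90, -0.50, 0.00, 0.70]  # very different from face_a

# The smaller the distance, the more similar the two faces are considered
print(embedding_distance(face_a, face_b) < embedding_distance(face_a, face_c))
```

A smaller distance means a closer match, so finding "your" painting comes down to finding the stored embedding with the smallest distance to yours.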

The face extraction and embedding process is done using the Face Recognition library. We will just need an image and the library will do the rest for us. First, we will obtain the faces from the images, and then we will obtain the embeddings of the faces.

```python
import face_recognition

DETECTION_MODEL = "hog"  # "hog" (faster on CPU) or "cnn" (more accurate, slower)

image_path = "path/to/image.jpg"
image = face_recognition.load_image_file(image_path)

# Bounding boxes of the detected faces, as (top, right, bottom, left) tuples
faces_locations = face_recognition.face_locations(
    image,
    model=DETECTION_MODEL,
    number_of_times_to_upsample=1,
)

# One 128-dimensional embedding per detected face
faces_embeddings = face_recognition.face_encodings(
    image,
    known_face_locations=faces_locations,
    num_jitters=1,
    model="large",
)
```

Once we have the faces and their embeddings, we can store the embeddings in a vector database.

```python
import uuid

import chromadb
from chromadb.config import Settings

# Legacy (pre-0.4) ChromaDB client. Newer releases use
# chromadb.PersistentClient(path="faces_prado") and persist automatically.
client = chromadb.Client(Settings(
    chroma_db_impl="duckdb+parquet",
    persist_directory="faces_prado",
))

collection = client.get_or_create_collection(name="faces_collection")

# image_id is assumed to be defined already: the identifier of the
# painting that `image` was loaded from.

# Iterate over the faces and store one embedding (plus metadata) per face
for face_location, face_embedding in zip(faces_locations, faces_embeddings):
    top, right, bottom, left = face_location
    width = abs(right - left)
    height = abs(bottom - top)

    collection.add(
        embeddings=[face_embedding.tolist()],
        metadatas=[{
            "image_id": image_id,
            "fl_top": top,
            "fl_right": right,
            "fl_bottom": bottom,
            "fl_left": left,
            "fl_width": width,
            "fl_height": height,
            "image_width": image.shape[1],
            "image_height": image.shape[0],
        }],
        ids=[uuid.uuid4().hex],
    )

# Flush the collection to disk once the faces are added (legacy client only)
client.persist()
```

And that’s it! We have the faces and their embeddings stored in a vector database.
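Storing the face location and the image size next to each embedding makes it possible to cut the matching face out of the painting later on. The following is only a sketch of that idea: the helper `crop_box`, its `margin` parameter, and the sample metadata are hypothetical and not taken from the project code.

```python
def crop_box(meta, margin=0.25):
    """Return (left, top, right, bottom) for a face, padded and clipped to the image.

    `meta` is a metadata dictionary with the keys stored alongside each embedding.
    `margin` is the padding on each side, as a fraction of the face size.
    """
    pad_x = int(meta["fl_width"] * margin)
    pad_y = int(meta["fl_height"] * margin)
    left = max(0, meta["fl_left"] - pad_x)
    top = max(0, meta["fl_top"] - pad_y)
    right = min(meta["image_width"], meta["fl_right"] + pad_x)
    bottom = min(meta["image_height"], meta["fl_bottom"] + pad_y)
    return left, top, right, bottom


# Made-up metadata for a single face, for illustration only
example_meta = {
    "fl_top": 40, "fl_right": 180, "fl_bottom": 140, "fl_left": 80,
    "fl_width": 100, "fl_height": 100,
    "image_width": 200, "image_height": 300,
}

print(crop_box(example_meta))  # (55, 15, 200, 165)
```

The tuple order (left, top, right, bottom) matches what Pillow's `Image.crop` expects, so the result could be passed to it directly if the painting is opened with Pillow.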

Find yourself

Now that we have the faces and their embeddings stored in a vector database, we can use it to find out if you were painted. The process is simple: we just need an image with a face, and we compare its embedding with the embeddings in the vector database.

We will omit the face extraction and embedding steps, as they are the same as in the previous section. All that is left is to query the vector database with the face embedding.

```python
def find_nearest_face(face_embedding):
    """
    Finds the nearest face to the given embedding.

    Parameters
    ----------
    face_embedding: np.array
        The face embedding to find the nearest face to.

    Returns
    -------
    dict
        A dictionary with the nearest face.
    """
    # Find the nearest face, ignoring faces narrower than MINIMUM_FACE_WIDTH
    # (assumed to be a module-level constant, in pixels)
    nearest_face = collection.query(
        query_embeddings=[face_embedding.tolist()],
        n_results=1,
        where={"fl_width": {"$gte": MINIMUM_FACE_WIDTH}},
    )

    # query() always returns a dict, so check the result list of the first
    # (and only) query rather than the dict itself
    if len(nearest_face["ids"][0]) == 0:
        raise ValueError("No face found")

    res = nearest_face["metadatas"][0][0]
    res["distance"] = nearest_face["distances"][0][0]
    res["face_id"] = nearest_face["ids"][0][0]

    return res
```

Note how we can set filters in the query. Here we filter by face width, because we are not interested in faces that are too small. The function returns the nearest face together with its metadata.
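To show what a filtered nearest-neighbour query does conceptually, here is a brute-force sketch in plain Python. It is not how ChromaDB works internally (a vector database relies on an index instead of scanning every vector), and the function `nearest_face_bruteforce`, the Euclidean metric, and the sample faces are all assumptions made for illustration.

```python
import math


def nearest_face_bruteforce(query, faces, min_width):
    """Return (distance, face) for the closest face whose width is >= min_width."""
    # Step 1: apply the metadata filter
    candidates = [face for face in faces if face["fl_width"] >= min_width]
    if not candidates:
        raise ValueError("No face found")
    # Step 2: keep the candidate with the smallest distance to the query
    best = min(candidates, key=lambda face: math.dist(query, face["embedding"]))
    return math.dist(query, best["embedding"]), best


# Made-up faces with toy 3-dimensional embeddings
faces = [
    {"face_id": "a", "fl_width": 20, "embedding": [0.1, 0.2, 0.3]},   # closest, but too small
    {"face_id": "b", "fl_width": 90, "embedding": [0.2, 0.2, 0.3]},
    {"face_id": "c", "fl_width": 120, "embedding": [0.9, 0.9, 0.9]},
]

distance, face = nearest_face_bruteforce([0.1, 0.2, 0.3], faces, min_width=50)
print(face["face_id"])  # b
```

Face "a" is an exact match for the query, yet it is discarded because it is narrower than the minimum width, so the best remaining candidate is returned instead.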

Code Repository

The web interface is outside the scope of this post, but you can always check the code repository to see the full code. The code repository is available on GitHub. Feel free to use it and contribute to the project.